What is database sharding?

To build high-performing applications — especially those leveraging AI or serving millions of users — engineering leaders must ensure their systems can scale efficiently without compromising reliability or speed. Database sharding (distributing data horizontally across multiple servers) is often thought of as a solution. However, it introduces operational challenges, increases complexity in deployments, maintenance, and queries.

Before adopting sharding, it’s important to consider performance-boosting alternatives that are cheaper and faster to implement. These benefits are crucial for technical decision-makers and CTOs who must balance long-term scalability with short-term development velocity and operational simplicity.

At Lazer, we’ve helped numerous clients dramatically improve database performance and eliminate bottlenecks — without jumping straight to sharding.

Through targeted, low-overhead strategies, we’ve delivered faster results while keeping systems simple and maintainable.

These solutions strike the right balance between long-term scalability and short-term development velocity — exactly what today’s CTOs and technical leaders need.

Let's walk though 8 strategies we've used and how to implement them in your own database.

Denormalization

When learning SQL we’re taught to normalize databases — splitting data into logical tables to avoid data redundancy and ensure consistency. But sometimes, breaking the rules helps: denormalization is a valid way to speed up complex queries by reducing joins.

A rule of thumb is lean toward normalization if you want to speed up write-heavy queries and use denormalization for read-heavy queries. In the following example, we denormalize and repeat user data in the orders table to avoid doing an extra join lookup on the users table.

CREATE TABLE users (

id SERIAL PRIMARY KEY,

name TEXT

);

CREATE TABLE orders (

id SERIAL PRIMARY KEY,

user_id INTEGER, /* Denormalized from users table */

user_name TEXT, /* Denormalized from users table */

product TEXT,

amount DECIMAL

);- When to use it: Good for read-heavy workloads with expensive joins.

- Tradeoffs: Denormalization increases the number of update paths — changes to user data now must be updated in multiple places. This can lead to inconsistencies if not managed carefully.

- When to stop: If you're spending more time syncing denormalized data or debugging consistency issues than you are speeding up reads, it's time to rethink denormalizing.

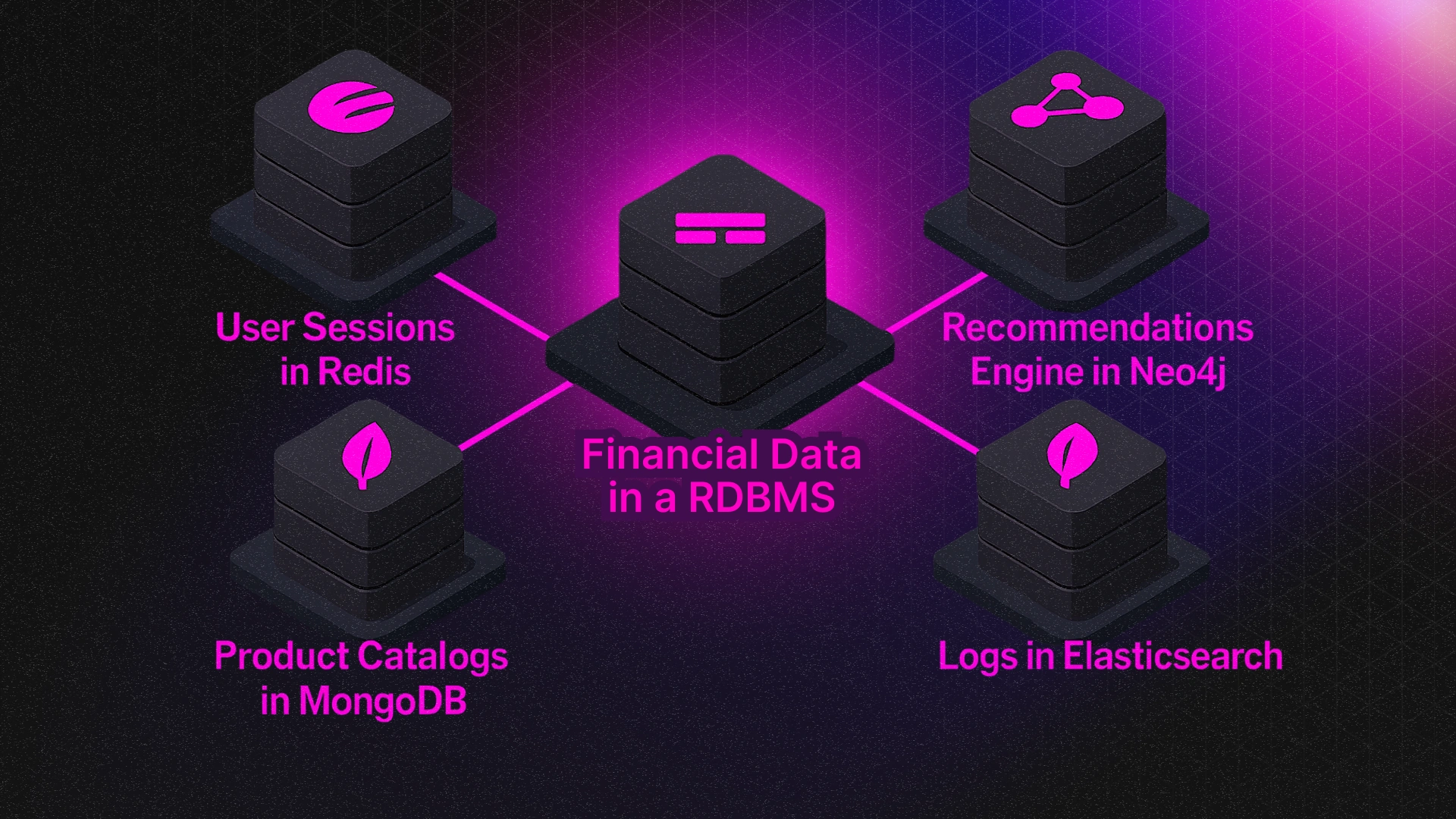

Polyglot persistence

It’s typical for an inexperienced software development team to use one database, usually PostgreSQL, for all their needs, forgetting to use the best tool for the job. Once an application hits a certain level of growth, it’s better to use different databases for different jobs.

Let's explore a few example jobs:

- Text search: Relational databases are not the best for text search, therefore if we have complex string search queries, it’s best to use a database that excels at that job. Elasticsearch or MongoDB Atlas Search (both based on Lucene) are great for complex full-text search requirements.

- Caching: We can offload expensive and frequently repeated queries into in-memory stores such as Redis or Memcached. Memory stores are also great for storing session data, which is frequently fetched with every authenticated request.

- Analytics (OLAP): For analytical queries over large datasets, consider columnar databases (Cassandra, BigQuery) or time-series databases (TimescaleDB, an extension for PostgreSQL).

- Graph/relationship Data: For complex relationships data such as social networks, recommendation engines, and mind-mapping tools, graph-based databases such as Neo4j or ArangoDB are more efficient for storing and describing this type of data.

- Ledger data: Due to compliance or a business requirements, we may need to store data that can’t be updated, changed, or deleted, only inserted. In these cases, a ledger database such as TigerBeetle and ImmuDB are the perfect match. Some typical use cases are logs, auditable information, or accounting records.

Tradeoffs: Introducing more systems increases operational overhead, monitoring complexity, and developer learning curves.

Tip: Use polyglot persistence once a single database starts to bottleneck on a specific access pattern or use case (e.g. full-text search or massive analytical queries).

Indexing

Creating indexes is one of the best way to improve query performance as it avoids full table scans. Database indexes function the same as a book index: when we need to find something in a book, we can use the index to quickly find the page that contains the information we want to read.

Imagine we have a table with all the purchases made by users of a commerce website. It could have millions of rows containing every purchase made by past users.

CREATE TABLE purchases (

id BIGSERIAL PRIMARY KEY,

user_id BIGINT NOT NULL,

product_id BIGINT NOT NULL,

amount NUMERIC(10, 2) NOT NULL,

purchased_at TIMESTAMPTZ NOT NULL DEFAULT NOW()

);Now we want to find the users who purchased products in the last month.

If we try to do such a query without any index, the database would need to do a full table scan, scanning row by row to get the answer. If we use an index,we can avoid the full table scan and quickly find users who purchased something in the last month.

CREATE INDEX index_purchased_at

ON purchases (purchased_at, user_id);Tradeoffs: Indexes increase write overhead and consume disk space. Over-indexing can slow down insert/update operations and bloat your database.

Tip: Prioritize indexing for frequently queried fields, and monitor usage with tools like pg_stat_user_indexes in Postgresql, or db.myCollection.aggregate([{ $indexStats: {} }]) in MongoDB.

Index-only scans or covered queries

Another strategy is the index-only scan in PostgreSQL, or covered queries in MongoDB. When a query can be satisfied entirely by using an index, the query planner can avoid the extra trip into the table heap and just extract the information directly from the index, acting effectively as a cache. In this case we can get blazing fast queries.

CREATE TABLE users (

id SERIAL PRIMARY KEY,

name TEXT,

email TEXT,

);

/* Create an index covering all columns needed by the query */

CREATE INDEX idx_users_session ON users (id, name, email);With the above index we can cover typical queries, such as session queries, without having to rely on a memory database.

Design patterns

We can use data design patterns to help us organize and speed up the performance of our queries. Some examples are:

- Archive pattern: If our queries mainly involve the most recent or newer items, we can move older data into a different table or storage. Examples include just keeping comments posted by users in the last 6 months, or the financial data from the last year, and moving everything else into an archive.

- Attribute pattern: This involves grouping similar fields into a sub-document or array. It’s particularly useful when dealing with documents containing many fields, but only a subset are frequently queried (e.g., product specifications with varying attributes). By organizing data this way, you can reduce the number of required indexes.

- Imagine we have an commerce storefront focusing on video games, where each game can have multiple

attributes. We can nest all theattributesunder an array and index it. This allows us to avoid having one index per attribute, speeding up index creation and maintenance.

- Imagine we have an commerce storefront focusing on video games, where each game can have multiple

{

"_id": "123",

"name": "Mario Bros",

"price": 1500,

// nest attributes in an array which will be indexed

"attributes": [

{ "key": "category", "value": "family" },

{ "key": "platform", "value": "Nintendo Switch" },

{ "key": "multiplayer", "value": true },

{ "key": "age_rating", "value": "E (Everyone)" },

{ "key": "developer", "value": "Nintendo" },

{ "key": "release_year", "value": 1983 },

{ "key": "genre", "value": "Platformer" }

]

}- Computed pattern: Instead of calculating resource-intensive values during read operations, this pattern stores pre-computed results in the document (e.g., total order value or total sales in a month). The data is updated only when needed, shifting computational overhead away from reads.

Vertical scaling

Sharding is achieved through horizontal scaling but involves a significant investment in developer time and operational costs. Initially, a quicker and cheaper alternative is gradually improving your hardware — vertical scaling — as much as it is cost effective. Just be careful, after the first few hardware upgrades, vertical scaling becomes prohibitively expensive, which will be a good indication to consider horizontal scaling via sharding.

⚡️ When to use it: Early-stage systems with modest load spikes or limited engineering bandwidth.

‼️ Limits: Vertical scaling quickly hits diminishing returns (and budget ceilings). When your biggest instance can’t keep up, it’s time to consider replication or horizontal strategies.

Read replicas

Using read replicas, we can offload read queries into our read-only replicas in a database. Replicas also give the extra benefit of resilience and data redundancy in case we lose our main or primary database.

In MongoDB, we can tell the cluster to serve all read queries through the secondary replica nodes, letting the primary node focus on write operations. This can be easily achieved through:

// All operations read from the secondary members of the replica set.

db.getMongo().setReadPref('secondary');

⚡️ Best for: Read-heavy applications, analytics dashboards, or apps with traffic spikes.

‼️ Limits:

- Replication lag can cause stale reads.

- Write traffic still goes to the primary, which can remain a bottleneck.

Partitioning

Partitioning is the process of breaking down a table into smaller, more manageable tables. Let’s return to the purchases table we described above, if we wanted we could partition the table by years or months, giving us a fast way to ignore older data.

CREATE TABLE purchases (

id BIGSERIAL,

user_id BIGINT NOT NULL,

product_id BIGINT NOT NULL,

amount NUMERIC(10, 2) NOT NULL,

purchased_at TIMESTAMPTZ NOT NULL DEFAULT NOW(),

PRIMARY KEY (id, purchased_at)

) PARTITION BY RANGE (purchased_at);We would then create the monthly partitions, allowing us to focus on more recent data.

CREATE TABLE purchases_2025_01 PARTITION OF purchases

FOR VALUES FROM ('2025-01-01') TO ('2025-02-01');

CREATE TABLE purchases_2025_02 PARTITION OF purchases

FOR VALUES FROM ('2025-02-01') TO ('2025-03-01');

⚡️ When to use it:

- Your queries focus on a subset of the data (e.g., “last 30 days”, “sales by region”).

- Your tables exceed hundreds of millions of rows.

🔄 Tradeoffs:

- Increased schema complexity.

- Poorly chosen partition keys can make things worse (e.g. all writes hitting the same partition).

Materialized views

For data that is slow, computationally expensive to query, and doesn't need to be constantly updated, we can use materialized views. A typical business requirement is needing to see the total sales per month. Instead of querying all the of rows, we could precompute this into a materialized view:

CREATE MATERIALIZED VIEW monthly_sales AS

SELECT

DATE_TRUNC('month', sale_date) AS month,

SUM(amount) AS total_sales

FROM sales

GROUP BY DATE_TRUNC('month', sale_date);And update the materialized view only when needed, perhaps once a month:

REFRESH MATERIALIZED VIEW monthly_sales;⚡️Best for:

- Dashboards, reports, pre-aggregated metrics

🔄 Tradeoffs:

- Needs manual or scheduled refresh

- Adds storage overhead

When should you consider sharding?

Eventually, all the above techniques may hit their limits. Here are signals that sharding might be the right next step:

✅ You're running on the biggest instance available to your budget and still seeing slowdowns.

✅ Your write throughput exceeds what a single machine can handle

✅ You're hitting storage or table size limits.

✅ Your dataset naturally segments by tenant, region, or another shardable key.

Which database performance strategy should you use?

Here’s a high-level checklist to guide you:

- Are queries slow?

- Add indexes or use index-only scans

- Are you seeing expensive joins?

- Try denormalization or computed fields

- Is your schema too rigid or inconsistent?

- Use design patterns like attribute or archive

- Are certain access patterns unique (e.g. search, analytics, text search)

- Use polyglot persistence

- Still slow after optimization?

- Add read replicas or vertical scaling

- Are tables too large to scan efficiently?

- Use partitioning

- Is aggregation expensive and infrequent?

- Use materialized views

Exhausted all above options? Now it’s time to shard!

We're here to help with your strategies or database needs

As we've shown, there are many high-impact strategies you can implement to dramatically boost database performance without taking on the complexity and overhead that comes with sharding. From denormalization and polyglot persistence to smarter indexing and materialized views, these techniques can help your systems stay fast, reliable, and scalable, while keeping your architecture maintainable.

At Lazer, we’ve helped startups and enterprises alike navigate these exact challenges, unlocking performance gains, unblocking teams, and avoiding unnecessary architectural complexity.

We can help you find the fastest path to scale — without sacrificing developer velocity or operational simplicity.